I just finished a major overhaul of the mesh and animation classes for the engine, and also added a resource cache for more efficient memory management. Before I go into the very lengthy discussion of the changes and what’s next on the horizon, here is a screenshot from the latest build.

The Geometry class has been dropped in favor of a Mesh class, which allows for both cached and dynamically created meshes. Vertex and index buffers are now created directly in memory managed by the graphics API, so they no longer have to be copied from another memory area, and the duplicate copy that used to sit in memory is gone. Textures are also loaded directly into graphics API memory, so the duplicate copy previously held in a separate Image object is gone as well.

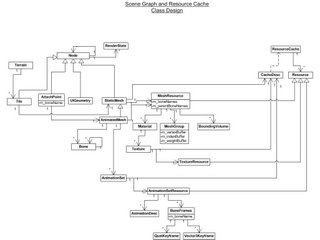

The ResourceCache class is the manager class for any class derived from the Resource class. The resource cache is used primarily to keep the most frequently used resources in memory and to allow that resource data to be shared. The resource cache only manages resource objects that come directly from files. It has to be this way because the cache must be able to load and unload resources dynamically, and that is only possible if the resource exists on disk.

Resources represent data that can be shared and do not include any instance-specific information. For example, the vertex and index data of a character mesh is resource data because it doesn’t change between two instances of the same type of character. Information that does change, such as local and world matrices, instance names etc., is not part of the resource information. In the example of a character mesh, the MeshResource would contain the resource data, and the Mesh class would contain the matrices, instance name etc. The Mesh class references the MeshResource object via a CacheDesc object, which basically describes where the resource comes from (usually a filename). When the mesh needs to access the MeshResource information, it makes a call to the resource cache using the CacheDesc object. The resource cache then checks whether the object is in memory. If it is, the cache simply passes a pointer back. If it is not, the cache loads the resource described by the CacheDesc and then passes back a pointer.
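To make the lookup concrete, here is a minimal sketch of the idea, not the engine's actual code. Only the class names Resource, MeshResource, CacheDesc and ResourceCache come from the description above. The `Get`, `Unload` and `ResidentCount` methods, the `Loader` callback and the `vertexCount` field are assumptions made for illustration.

```cpp
#include <cstddef>
#include <functional>
#include <memory>
#include <string>
#include <unordered_map>
#include <utility>

// Base class for anything the cache can manage.
struct Resource {
    virtual ~Resource() = default;
};

// Shared, instance-independent mesh data (stand-in for vertex/index buffers).
struct MeshResource : Resource {
    int vertexCount = 0;  // hypothetical field
};

// Describes where a resource comes from; here just a filename.
struct CacheDesc {
    std::string filename;
};

class ResourceCache {
public:
    // Hypothetical hook that reads a resource from disk.
    using Loader = std::function<std::unique_ptr<Resource>(const CacheDesc&)>;

    explicit ResourceCache(Loader loader) : loader_(std::move(loader)) {}

    // Returns the resident resource, loading it first if it is not in memory.
    Resource* Get(const CacheDesc& desc) {
        auto it = resources_.find(desc.filename);
        if (it == resources_.end()) {
            it = resources_.emplace(desc.filename, loader_(desc)).first;
        }
        return it->second.get();
    }

    // Drops a resource; the next Get() reloads it from its CacheDesc.
    void Unload(const CacheDesc& desc) { resources_.erase(desc.filename); }

    std::size_t ResidentCount() const { return resources_.size(); }

private:
    Loader loader_;
    std::unordered_map<std::string, std::unique_ptr<Resource>> resources_;
};
```

Because a resource can be unloaded at any time, callers in this sketch should ask the cache again via the CacheDesc each time they need the data rather than hold on to the returned pointer.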

Classes currently derived from the Resource class include TextureResource, HeightmapResource, AnimationResource and MeshResource. The Mesh and Texture classes also allow creation and saving of dynamically created meshes and textures. In the case of the Mesh object, this is done by holding a direct pointer to a MeshResource object that is not managed by the resource cache (the cache only manages file-based resources). When you create a mesh object you specify whether the mesh comes from a file, in which case the CacheDesc property is used, or is dynamic, in which case the pointer to the MeshResource object is used.
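The file-versus-dynamic split could look roughly like the sketch below. It is an illustration under assumptions, not the real Mesh class: the `FromFile` and `Dynamic` factory functions, `GetResource`, `IsDynamic`, and the one-slot stand-in cache with its `GetMesh` method are all hypothetical names.

```cpp
#include <memory>
#include <string>
#include <utility>

struct MeshResource {
    int vertexCount = 0;  // hypothetical stand-in for the buffer data
};

struct CacheDesc {
    std::string filename;
};

// Minimal stand-in for the resource cache, just enough to resolve a CacheDesc.
struct ResourceCache {
    MeshResource resident;  // pretend this was loaded from disk
    MeshResource* GetMesh(const CacheDesc&) { return &resident; }
};

class Mesh {
public:
    // File-based mesh: the resource data is owned and managed by the cache.
    static Mesh FromFile(CacheDesc desc) {
        Mesh m;
        m.desc_ = std::move(desc);
        m.fromFile_ = true;
        return m;
    }

    // Dynamic mesh: the resource is owned by the mesh and never cached.
    static Mesh Dynamic(std::unique_ptr<MeshResource> resource) {
        Mesh m;
        m.dynamic_ = std::move(resource);
        return m;
    }

    bool IsDynamic() const { return !fromFile_; }

    // Resolves the resource through the cache or the direct pointer.
    MeshResource* GetResource(ResourceCache& cache) {
        if (fromFile_) return cache.GetMesh(desc_);
        return dynamic_.get();  // may be null if no resource was supplied
    }

private:
    Mesh() = default;

    CacheDesc desc_;                         // used when fromFile_ is true
    std::unique_ptr<MeshResource> dynamic_;  // used otherwise
    bool fromFile_ = false;
};
```

Instance data such as matrices and names would live alongside these members in the Mesh itself and is left out here to keep the sketch short.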

As you may have gathered from above, models and animations are now exported as separate files. This means animations can be shared between models, which cuts down on memory usage and file sizes. It also makes it possible to plug new animations into the engine without having to re-export all the models.

Animations are exported as ‘animation sets’. Each animation set can contain multiple animations, each identified by its name and its start and end frames. One or more animation sets are bound to an AnimatedMesh object at run-time, and the binding copies references to those animations into the list of animations available to that mesh. Issuing a ‘Play’ call to the AnimatedMesh object then sets a reference to the AnimationSet object, which is used to update the bone matrices each frame. At the moment the vertices are transformed by the bone matrices and written into a dynamic underlying Mesh object that belongs to the AnimatedMesh class, and that mesh is what gets rendered when the AnimatedMesh object is rendered. In the future this will be replaced with a vertex shader: the matrix values will be passed in shader registers and the bone/weight table will be supplied with the vertex data, so the model can be rendered from a static vertex set that stays in video card memory without being altered.
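As a rough sketch of the bind-and-play flow described above (assumptions throughout, not the engine's code): the `Animation` struct, the `Bind`, `Play`, `Update` and `CurrentFrame` methods, and the looping behaviour are all hypothetical. The bone matrix update itself is omitted, so the sketch only tracks which frame of the clip is current.

```cpp
#include <map>
#include <string>
#include <vector>

// One named clip inside an animation set.
struct Animation {
    std::string name;
    int startFrame;
    int endFrame;
};

struct AnimationSet {
    std::vector<Animation> animations;
};

class AnimatedMesh {
public:
    // Binding stores references (pointers here) to the set's animations.
    // The set must outlive the mesh and must not be modified after binding.
    void Bind(const AnimationSet& set) {
        for (const Animation& a : set.animations) {
            available_[a.name] = &a;
        }
    }

    // Selects a bound animation by name; returns false if it is unknown.
    bool Play(const std::string& name) {
        auto it = available_.find(name);
        if (it == available_.end()) return false;
        current_ = it->second;
        frame_ = current_->startFrame;
        return true;
    }

    // Advances one frame, looping within the clip's range (an assumption).
    // A real implementation would recompute the bone matrices here.
    void Update() {
        if (!current_) return;
        frame_ = (frame_ >= current_->endFrame) ? current_->startFrame
                                                : frame_ + 1;
    }

    int CurrentFrame() const { return frame_; }

private:
    std::map<std::string, const Animation*> available_;
    const Animation* current_ = nullptr;
    int frame_ = 0;
};
```

Since the mesh only holds references, two character models could bind the same set and share one copy of the animation data.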

OK, that was a little more technical than I was planning to get for this blog update, but maybe someone will find the detail useful. The long and the short of it is that, from a rendering and animation standpoint, the design is now looking pretty solid. Some of the smaller but more visible changes include fog for distance rendering and a skybox.

The next big changes in store for the core engine will be the implementation of AABB (axis-aligned bounding box) tests against static and animated mesh objects, a dynamic quadtree class, attachment tags for meshes, more user interface code, indoor environment rendering and a particle system. Once these are done, the real test will be using all the components to build an actual (albeit single-player) game.

Before any of those engine changes, though, I must add a static object manifest and static object placement to the terrain editor. Together with the 3D Studio export scripts that are already written, this will allow world designers to start putting together environments for testing. On this subject, I also had a thought about calculating a shadow map for the terrain based on the light positions and object placement. I am not sure how complex this will be, but I think it would add considerably to the overall aesthetics. Priority-wise this is pretty low, and it will probably remain a wish-list item for the time being.